What is Agent57?

Part 1

In 2012 a team in Alberta, Canada introduced the Arcade Learning Environment (ALE) as a tool to help develop artificial intelligence. They created an environment that lets AI agents play the simple games of the 1970s on the Atari 2600 VCS through an emulator (Stella). The ALE gives another program a way to send input to the emulator, and it hands the emulator’s output (the screen) or the system’s internal RAM back to that program as input. The paper goes on to demonstrate several methods of using the ALE to train an AI agent to play games, and finally proposes these simple games from the early video computer systems as a way to advance AI. To properly test what might be considered specialized AI (an AI that excels at a single task), the paper selected 57 games as a test.
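To make that input/output loop concrete, here is a minimal sketch in Python. It does not use the real ALE API: `ToyEmulator`, its method names, its four-action list, and its scoring rule are all made up for illustration. The only detail taken from the hardware is the 128 bytes of RAM. A real setup would hand the agent the screen in the same way it hands over the RAM here.

```python
import random


class ToyEmulator:
    """Hypothetical stand-in for an emulator wrapper. NOT the real ALE API."""

    RAM_SIZE = 128  # the Atari 2600 has 128 bytes of RAM
    ACTIONS = ["NOOP", "FIRE", "LEFT", "RIGHT"]  # illustrative subset only

    def __init__(self, max_frames=50, seed=0):
        self._rng = random.Random(seed)
        self._max_frames = max_frames
        self.reset()

    def reset(self):
        """Start a new game with cleared RAM."""
        self._frame = 0
        self._ram = bytearray(self.RAM_SIZE)

    def act(self, action):
        """Send one input to the emulator and return the change in score."""
        if action not in self.ACTIONS:
            raise ValueError(f"unknown action: {action}")
        self._frame += 1
        self._ram[0] = self._frame % 256  # pretend byte 0 is a frame counter
        # Made-up rule: firing scores 10 points about 30% of the time.
        return 10 if action == "FIRE" and self._rng.random() < 0.3 else 0

    def get_ram(self):
        """Expose the internal RAM as the agent's observation."""
        return bytes(self._ram)

    def game_over(self):
        return self._frame >= self._max_frames


def play_episode(emulator, policy):
    """Run one game: observe, choose an action, collect the reward, repeat."""
    emulator.reset()
    total = 0
    while not emulator.game_over():
        observation = emulator.get_ram()
        total += emulator.act(policy(observation))
    return total


def random_policy(observation, _rng=random.Random(1)):
    """The simplest possible agent: ignore the observation, pick at random."""
    return _rng.choice(ToyEmulator.ACTIONS)


score = play_episode(ToyEmulator(), random_policy)
```

Every agent discussed later in this post is, at heart, a smarter replacement for `random_policy` in this loop.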

Some of the games are quite easy, such as Space Invaders, which requires shooting objects that slowly scroll across the top of the screen while avoiding being shot (in case you’re too young to remember, here’s a video). The targets moved back and forth, left and right, and dropped one space each time they changed direction. If the player failed to hit them all, they would reach the bottom of the screen and the game was over. The other way to lose was the proverbial “running out of lives.” If an enemy missile hit the player three times, the game ended. Occasionally another object, the mother ship, crossed the top of the screen. Hitting it required the highest skill at deflection shooting, and it was worth many more points than any of the numerous lower targets. Playing required only moving left and right and pressing the button to fire. Even as simple as it was, the game contained some subtleties.

These subtle aspects of the game required a different play style to maximize the score (the goal, though for me it was hitting more aliens than my father could). The player could only have a single bullet on the screen at a time and had to wait until it hit an object or disappeared off the top of the screen. A missed shot meant a long wait, a serious penalty for missing. As the player shot the lower targets, the rest sped up until the last one moved so fast that it could drop one row in the time it took a missed shot to clear the screen. Good players tended to shoot the lower aliens until hitting one more would trigger the first speed increase, then shift to hitting only the mother ship until the aliens got low enough to be dangerous. This produced the highest score with the least danger. But the game had limits. Even though the aliens sped up over a longer game and started lower and lower, they never exceeded a maximum speed or depth, and for a player good enough to rarely die, it was simply faster to clear the lower targets to achieve the maximum score in the shortest time. However, becoming that good was difficult, since at full speed it challenged the human brain to take in all the information (retinal neurons looking at the screen), decipher it (visual processing in the visual cortex), think and predict (prefrontal synthesis), and move the fingers in time to react (the primary motor cortex, stretching over the top of the brain from ear to ear). Some players became very good, but death would eventually come, though perhaps only from lack of sleep. This game took no reasoning, only simple prediction and good hand-eye coordination. Other games were more difficult.

One such game was Solaris. Its universe consists of sixteen quadrants, each containing 48 sectors, and a sector can hold any of over nineteen different icons indicating different missions. Choosing a sector can be a free choice, or it might be a requirement such as refueling, repairing, or saving a friendly planet. Each sector contains different enemies based on its contents. A readout positioned at the bottom shows different icons depending on whether the player is in warp or not, and it can be damaged, requiring the player to use two numbers at the right or left to work out what it would normally show visually. Complicated? Yeah, and there are components I haven’t even touched on, like corridors, which use a completely different visual screen. The goal: navigate all sixteen quadrants to reach Solaris. This game requires not only great hand-eye coordination but both strategic and tactical thinking. Add in that Solaris does have a running score counter (the goal is to maximize it), but points are added only at the end of a large goal, making it difficult for an agent to see a reward for a good action.

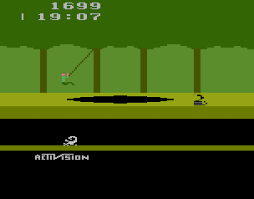

Another game, Pitfall!, works completely differently. Here the world is static; it never changes. Players can memorize what each screen contains and even how best to navigate between them. The screens look similar, but navigating them takes quick thinking and fast fingers, as they are made up of components that seem randomly placed. To win, the player needs to navigate the screens and collect gold and silver bars, relying on memorization. A timer at the top of the screen counts down from twenty minutes. Being a static game, it might seem a great candidate for an agent, but the screens were diverse enough and the map large enough to require a much larger memory (or a more complex network) than many agents had. The problem with Pitfall! is that playing it is very, very different from most other titles, so an agent trained on Space Invaders and Solaris would find the new environment completely alien. The 57 games picked create diversity and constitute a challenge for a single agent to learn to play. It is important to understand that this is not a suite of agents each designed to learn one game, but a single agent with the ability to learn all 57 games and play them above a human level.
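“Above a human level” is usually measured with a human-normalized score: the agent’s score is placed on a scale where random play is 0% and a human reference player is 100%. The sketch below shows the arithmetic. The function name and the three example scores are made up for illustration and are not measured results.

```python
def human_normalized_score(agent, random_play, human):
    """Return the agent's score as a percentage of the human-over-random gap.

    0.0 means "no better than random play"; 100.0 means "equal to the human".
    """
    if human == random_play:
        raise ValueError("human and random scores must differ")
    return 100.0 * (agent - random_play) / (human - random_play)


# Hypothetical scores for one game, for illustration only.
example = human_normalized_score(agent=1500.0, random_play=100.0, human=800.0)
print(example)  # 200.0, i.e. twice the human's margin over random play
```

Normalizing this way makes games with wildly different point scales comparable, which matters when one agent is judged across all 57 of them.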

Why the Atari 2600? It is a simple, early device. It has only 128 bytes of RAM, no video buffer (programmers drew the screen with the CPU one line at a time using 4 single-line sprite objects), and only 9 inputs for the player (8 joystick positions and a single button). This kept games simple (for the most part, though some programmers pushed the system far beyond that). I remember the 2600 well, and it was rare to read the manual after purchasing a new game. Instead we just started playing, moving the joystick around to see what moved, how it moved, and what the button did. We learned which actions generated score or other positive rewards and which were detrimental. We could intuitively figure out how to play simply by exploring. Some games required exploring, and the game state only changed if the player moved. Other games had hidden chambers and secret passages that meant walking into what appeared to be a wall but was, in game reality, an illusion. Experimentation and exploration, required components of many games, are necessary in any agent.

In 2015, DeepMind released a paper on Double Q-learning that produced the best agent, one that could beat the human normalized score in 29 of the 57 games. These were mostly the move-and-shoot-at-targets-while-avoiding-being-shot games (Galaga-like) or the move-through-the-maze-while-avoiding-the-bad-guys games (Pac-Man-like). In the next version they added short-term memory so an agent could recognize and remember previous actions, for both on-policy learning (LSTM) and off-policy learning (DRQN). The next very successful version added an exploration module that let the agent explore using various techniques to find how to achieve the goal or change the state of the screen. A good example of this is Montezuma’s Revenge, in which the player can stay in the same place for as long as they choose without dying or changing the score. An agent without exploration soon learns not to move on this screen, as moving invariably leads to death. This is because the game’s setting is a cave and many exits are illusory walls that the player can only find by bumping into every wall to determine whether it is real. Other screens are completely dark (no light source), but there are still obstacles, structures, and exits, though they can only be “felt,” not seen. Finally, DeepMind added episodic memory to allow the agent to switch from “learned” mode to “explore” mode when new and different-looking screens were discovered. They coupled this with a density model in the exploration engine so the agent could judge how often it had seen a specific element or one like it (familiarity). This allowed the agent to use the exploration mode to try to change the game state. Without this, the agent might do nothing, or repeat a few movements that had no effect, essentially getting “stuck” in a game that required moving from screen to screen, like Tutankham, Pitfall!, or Krull.
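The familiarity idea can be illustrated with a much simpler technique: a count-based exploration bonus. The sketch below is not DeepMind’s density model or episodic memory. It only counts exact repeats of a state label and pays a bonus that shrinks as the count grows. The class name, the `scale` parameter, and the room labels are all assumptions made for this example.

```python
import math
from collections import Counter


class FamiliarityBonus:
    """Count-based novelty bonus: the more often a state is seen, the less it pays."""

    def __init__(self, scale=1.0):
        self.scale = scale
        self.visits = Counter()

    def observe(self, state_key):
        """Record a visit and return the bonus, scale / sqrt(visit count)."""
        self.visits[state_key] += 1
        return self.scale / math.sqrt(self.visits[state_key])


bonus = FamiliarityBonus()
first = bonus.observe("room-1")    # first visit: full bonus of 1.0
second = bonus.observe("room-1")   # second visit: smaller bonus, 1 / sqrt(2)
new_room = bonus.observe("room-2") # unseen room: full bonus of 1.0 again
```

An agent that adds this bonus to the game’s own reward has a reason to walk into a new screen even when the score does not change. Exact counting stops working once screens differ by only a pixel or two, which is why judging “one like it” calls for something like a density model.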

In the latest incarnation of DeepMind’s Atari 2600 agent, they added a meta-controller. Many games require exploration to learn which targets are worth more than others, such as Fishing Derby, where the deeper fish are worth more than the shallow fish and really don’t represent that much more difficulty, only more time. That can be handled in easy games that are static, but not in games with multiple screens, like Tutankham or Pitfall!, that first need to be fully explored before optimal play can be achieved. Other times it is necessary to balance short-term and long-term goals to optimize game play. Games such as Solaris, which only give rewards at the end of a long series of events, require focusing on long-term goals, versus games like Asterix (Taz in the United States), where the only goal is to touch one set of icons while avoiding another. To handle exploration versus learned play and short-term versus long-term rewards, the meta-controller uses the concept of a “time horizon” that switches between the various modes to seek a long-term goal versus a short-term goal, and governs how long to play in learned mode versus how long to stay in explore mode to change the game state. As it turns out, for many games, reaching the highest possible score in the least amount of time means first fully exploring the game, then picking which long-term or short-term strategies lead to optimal play. DeepMind solved this using a type of algorithm from stochastic decision theory called a sliding-window upper confidence bound, which measures the potential of an action by valuing the rewards it previously received while, over time, increasing the value of untried actions. In this manner all actions are tried, but those that offer no reward or low reward are quickly removed. With this addition to their last agent, Never Give Up (NGU), they created Agent57.
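For readers who want to see the mechanics, here is a minimal sketch of a textbook sliding-window upper confidence bound bandit. It is not DeepMind’s implementation. In this sketch each “arm” stands for one play mode (an assumption made for the example), and the class name, the `beta` weight, the window size, and the fixed payoffs are all hypothetical.

```python
import math
from collections import deque


class SlidingWindowUCB:
    """Pick among arms using only the most recent `window` plays."""

    def __init__(self, n_arms, window, beta=1.0):
        self.n_arms = n_arms
        self.beta = beta  # weight of the exploration bonus
        # Stores (arm, reward) pairs; the oldest pair is dropped automatically.
        self.history = deque(maxlen=window)

    def select(self):
        counts = [0] * self.n_arms
        sums = [0.0] * self.n_arms
        for arm, reward in self.history:
            counts[arm] += 1
            sums[arm] += reward
        # An arm with no plays inside the window is tried first.
        for arm in range(self.n_arms):
            if counts[arm] == 0:
                return arm
        total = len(self.history)

        def score(arm):
            mean = sums[arm] / counts[arm]  # value of past rewards
            bonus = self.beta * math.sqrt(math.log(total) / counts[arm])
            return mean + bonus  # rarely tried arms get a larger bonus

        return max(range(self.n_arms), key=score)

    def update(self, arm, reward):
        self.history.append((arm, reward))


def run_demo(rounds=200):
    """Play a toy problem with fixed, made-up payoffs and count the picks."""
    payoffs = [0.1, 0.9, 0.5]  # hypothetical reward per arm
    bandit = SlidingWindowUCB(n_arms=len(payoffs), window=50, beta=0.5)
    picks = [0] * len(payoffs)
    for _ in range(rounds):
        arm = bandit.select()
        bandit.update(arm, payoffs[arm])
        picks[arm] += 1
    return picks
```

Calling `run_demo()` returns how often each arm was chosen; the high-payoff arm ends up with most of the picks. The window is the design choice that matters here: because old plays are forgotten, a low-reward arm is chosen rarely rather than dropped for good, and it gets another try once its last play falls out of the window. That suits an agent whose best mode can change as it learns the game.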

So how did it do? We’ll look at that next week, and dive into the question of whether Agent57 is a true specialized AI.